This is another important question that often comes up in interviews. I struggled for a long time to understand it, and it finally clicked with a classic example.

Parallel processing comes into the picture when a large volume of rows must be processed without compromising performance, which would otherwise suffer badly with non-parallel processing. We will try to understand the concept through an actual example where it has been used.

Requirement - There are 100,000 employees in the organization and many of them have more than one assignment (EMPL_RCD), so the PER_ORG_ASGN record holds 120,000 rows in total. Develop a program to update the primary job indicator flag for each employee in the table PRIMARY_JOBS.

Implementing Parallel Processing using Temporary Record with Application Engine

Step 1: Open the App Designer.

Step 3: Create a record BN_AGE50_AET of type "Derived/Work".

Step 4: Create a new Application Engine program "BN_UPD_PRIM" in App Designer.

Step 5: Open the Application Engine program properties, go to the "Temp Tables" tab, and assign all three temp tables. Set the instance count to 10.

Step 7: Build the temporary records BN_EMPLID_WRK, BN_EMPLID1_WRK and BN_JOB_WRK. App Designer creates as many instances of each temporary table as the instance count given in Program Properties -> Temp Tables, which is 10 in our case. The tables for each temp record are therefore created as below:

BN_EMPLID_WRK - BN_EMPLID_WRK1, BN_EMPLID_WRK2... up to 10 instances

BN_EMPLID1_WRK - BN_EMPLID1_WRK1, BN_EMPLID1_WRK2.... up to 10 instances

BN_JOB_WRK - BN_JOB_WRK1, BN_JOB_WRK2.... up to 10 instances
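The naming pattern above can be sketched in a few lines of Python. This is only an illustration of the pattern as described in this post, not how PeopleTools builds the tables; the helper name `temp_instance_names` is made up for the example.

```python
def temp_instance_names(record: str, instance_count: int) -> list[str]:
    """Return the numbered instance names for one temporary record.

    Hypothetical helper: it only mirrors the naming shown in this post
    (record name followed by 1..instance_count).
    """
    return [f"{record}{n}" for n in range(1, instance_count + 1)]


if __name__ == "__main__":
    for rec in ("BN_EMPLID_WRK", "BN_EMPLID1_WRK", "BN_JOB_WRK"):
        names = temp_instance_names(rec, 10)
        print(rec, "->", names[0], "...", names[-1])
```

With an instance count of 10, each of the three records yields ten names, so 30 instance tables in total.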

Step 8: In the MAIN section of the App Engine program (or in a new section), add a Step/Action of type SQL, then add the SQL below to it.

This SQL loads all the employees into the temp table instances BN_EMPLID_WRK1, BN_EMPLID_WRK2, etc. Make sure you use the %Table meta-SQL with the temp table name.
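To make the role of %Table concrete, here is a small Python simulation of the substitution: the record name inside `%Table(...)` is replaced by the name of one numbered instance. It is a sketch under assumptions, not PeopleTools code. The function `resolve_table_meta_sql`, the sample INSERT statement and the choice of instance 3 are all hypothetical, and the instance naming follows this post.

```python
import re


def resolve_table_meta_sql(sql: str, instance: int) -> str:
    """Replace every %Table(REC) in the SQL text with REC + instance number.

    Hypothetical simulation of the idea only; the real resolution happens
    inside Application Engine at run time.
    """
    return re.sub(
        r"%Table\(\s*(\w+)\s*\)",
        lambda m: f"{m.group(1)}{instance}",
        sql,
    )


if __name__ == "__main__":
    # Assumed statement for illustration; the actual SQL of the step is not shown here.
    sql = "INSERT INTO %Table(BN_EMPLID_WRK) (EMPLID) SELECT EMPLID FROM PS_PERSON"
    print(resolve_table_meta_sql(sql, 3))
```

The point of the sketch is that the SQL text never names a specific instance; only the meta-SQL reference appears in the step.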

Let's say we have 100,000 employees in the PS_PERSON record and each employee has just one assignment in PS_PER_ORG_ASGN. Since the instance count for the temp record is 10, when the program runs the 100,000 selected rows are divided into chunks of 100,000/10 = 10,000 rows, and each chunk of 10,000 rows is inserted into one of the available instances BN_EMPLID_WRK1, BN_EMPLID_WRK2, etc.

Please note that we don't have to worry about which instance a particular chunk is inserted into; the %Table meta-SQL does that for us. However, Application Engine will not necessarily process the rows in exactly the way described above. It is just a logical explanation, meant to convey that the 100,000 selected rows are divided and loaded into the appropriate temp table instances to be processed simultaneously, which is nothing but parallel processing.
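The arithmetic of that logical split can be sketched as follows. This only models the even division described above (100,000 rows over 10 instances); the function name `split_into_chunks` is hypothetical, and the sketch makes no claim about how Application Engine actually distributes rows.

```python
def split_into_chunks(row_count: int, instance_count: int) -> list[range]:
    """Divide row positions 0..row_count-1 into instance_count contiguous chunks.

    Any remainder is spread one row at a time over the first chunks,
    so chunk sizes differ by at most one.
    """
    base, extra = divmod(row_count, instance_count)
    chunks = []
    start = 0
    for i in range(instance_count):
        size = base + (1 if i < extra else 0)
        chunks.append(range(start, start + size))
        start += size
    return chunks


if __name__ == "__main__":
    for n, chunk in enumerate(split_into_chunks(100_000, 10), start=1):
        print(f"BN_EMPLID_WRK{n}: {len(chunk)} rows")
```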

Once the above step has run, the temp table BN_EMPLID_WRK is loaded with a large volume of data (100,000 rows) of employees eligible to be processed. How many rows have been loaded into which temp table instance (BN_EMPLID_WRK1, BN_EMPLID_WRK2, etc.) is not our concern.

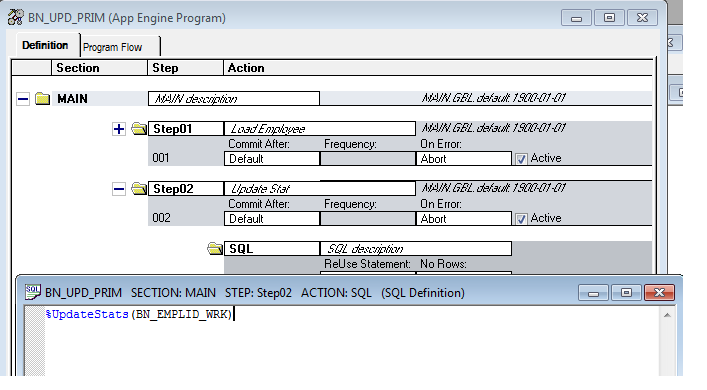

Step 9: Add the Step/Action below to update the statistics of the temp table BN_EMPLID_WRK, so that its indexes perform well when rows are selected from the instances of this table in later steps.

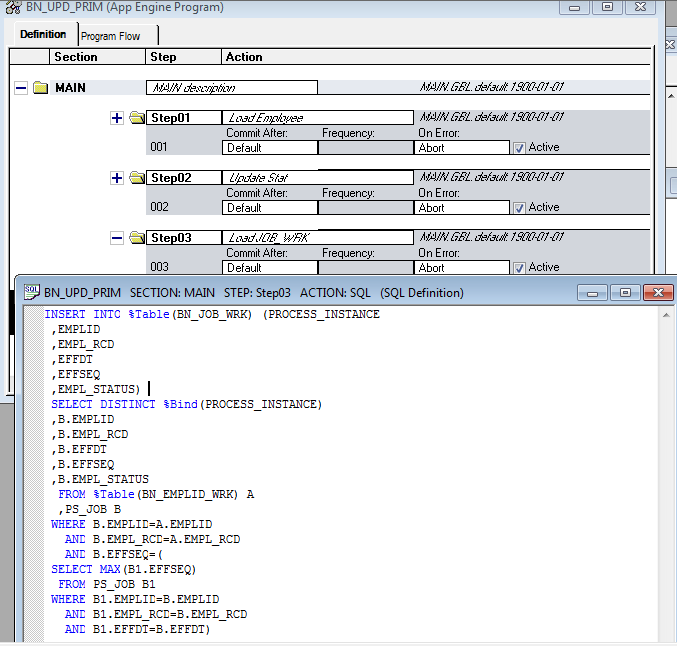

Step 10: Add the Step/Action below to get the JOB data (EFFDT, EFFSEQ and EMPL_STATUS) for each employee loaded in BN_EMPLID_WRK, and insert it into another temp table, BN_JOB_WRK (BN_JOB_WRK1, BN_JOB_WRK2 ... up to 10 instances).

Here again, as you can see, we just use the %Table meta-SQL with the table BN_JOB_WRK in the SQL, and it takes care of how the rows are allocated to the instances BN_JOB_WRK1, BN_JOB_WRK2, etc.

Step 11: Add the Step/Action below to update the statistics of the temp table BN_JOB_WRK, so that its indexes perform well when rows are selected from the instances of this table in later steps.

Step 12: Add the Step/Action below to select those employees loaded in the temp table BN_EMPLID_WRK who do not have more than one assignment (EMPL_RCD) for a benefit record number (BENEFIT_RCD_NBR), and insert them into another temp table, BN_EMPLID1_WRK (BN_EMPLID1_WRK1, BN_EMPLID1_WRK2 ... up to 10 instances).

Step 13: Add the Step/Action below to update the statistics of the temp table BN_EMPLID1_WRK, so that its indexes perform well when rows are selected from the instances of this table in later steps.

Step 14: Finally, mark the current EMPL_RCD as "Primary Job" in the table PS_PRIMARY_JOBS for the processed employees by joining all three temp tables.
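The data flow of Steps 8 to 14 can be tried out on a toy scale. The sketch below uses Python's built-in `sqlite3` with one instance of each temp table. Everything in it is an assumption made for illustration: the simplified table layouts, the sample rows and the SQL statements are hypothetical stand-ins, not the program's real SQL, and the statistics steps are left out.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create simplified, hypothetical versions of the tables with sample rows."""
    conn = sqlite3.connect(":memory:")
    conn.executescript("""
        CREATE TABLE PER_ORG_ASGN (EMPLID TEXT, EMPL_RCD INTEGER, BENEFIT_RCD_NBR INTEGER);
        CREATE TABLE JOB (EMPLID TEXT, EMPL_RCD INTEGER, EFFDT TEXT, EFFSEQ INTEGER, EMPL_STATUS TEXT);
        CREATE TABLE PRIMARY_JOBS (EMPLID TEXT, EMPL_RCD INTEGER, PRIMARY_JOB_IND TEXT);
        -- One instance of each temp table, named as in this post.
        CREATE TABLE BN_EMPLID_WRK1 (EMPLID TEXT);
        CREATE TABLE BN_JOB_WRK1 (EMPLID TEXT, EMPL_RCD INTEGER, EFFDT TEXT, EFFSEQ INTEGER, EMPL_STATUS TEXT);
        CREATE TABLE BN_EMPLID1_WRK1 (EMPLID TEXT, EMPL_RCD INTEGER);
        -- E1 has one assignment; E2 has two for the same benefit record number.
        INSERT INTO PER_ORG_ASGN VALUES ('E1', 0, 0), ('E2', 0, 0), ('E2', 1, 0);
        INSERT INTO JOB VALUES ('E1', 0, '2020-01-01', 0, 'A'),
                               ('E2', 0, '2020-01-01', 0, 'A'),
                               ('E2', 1, '2021-01-01', 0, 'A');
        INSERT INTO PRIMARY_JOBS VALUES ('E1', 0, 'N'), ('E2', 0, 'N'), ('E2', 1, 'N');
    """)
    return conn


def run_steps(conn: sqlite3.Connection) -> list[tuple]:
    """Run assumed equivalents of Steps 8, 10, 12 and 14 and return PRIMARY_JOBS."""
    # Step 8: load the employees to be processed.
    conn.execute("INSERT INTO BN_EMPLID_WRK1 SELECT DISTINCT EMPLID FROM PER_ORG_ASGN")
    # Step 10: pull JOB data for the loaded employees.
    conn.execute("""
        INSERT INTO BN_JOB_WRK1
        SELECT J.EMPLID, J.EMPL_RCD, J.EFFDT, J.EFFSEQ, J.EMPL_STATUS
        FROM JOB J JOIN BN_EMPLID_WRK1 W ON W.EMPLID = J.EMPLID
    """)
    # Step 12: keep employees with a single assignment per benefit record number.
    conn.execute("""
        INSERT INTO BN_EMPLID1_WRK1
        SELECT A.EMPLID, MIN(A.EMPL_RCD)
        FROM PER_ORG_ASGN A JOIN BN_EMPLID_WRK1 W ON W.EMPLID = A.EMPLID
        GROUP BY A.EMPLID, A.BENEFIT_RCD_NBR
        HAVING COUNT(*) = 1
    """)
    # Step 14: flag the primary job by joining all three temp tables.
    conn.execute("""
        UPDATE PRIMARY_JOBS SET PRIMARY_JOB_IND = 'Y'
        WHERE EXISTS (
            SELECT 1
            FROM BN_EMPLID_WRK1 W
            JOIN BN_EMPLID1_WRK1 S ON S.EMPLID = W.EMPLID
            JOIN BN_JOB_WRK1 J ON J.EMPLID = S.EMPLID AND J.EMPL_RCD = S.EMPL_RCD
            WHERE S.EMPLID = PRIMARY_JOBS.EMPLID AND S.EMPL_RCD = PRIMARY_JOBS.EMPL_RCD
        )
    """)
    return conn.execute(
        "SELECT EMPLID, EMPL_RCD, PRIMARY_JOB_IND FROM PRIMARY_JOBS ORDER BY EMPLID, EMPL_RCD"
    ).fetchall()


if __name__ == "__main__":
    for row in run_steps(build_demo_db()):
        print(row)
```

In this toy data only E1, who has a single assignment, ends up flagged; E2's two rows are left unchanged because Step 12 filters that employee out.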

As you can see, implementing parallel processing is simpler than it first appears. Comparing it with the non-parallel way of handling the same requirement helps to understand the concept more clearly.

Let's discuss how the requirement would have been handled if there were no mechanism called "Parallel Processing".

1) Record Definition - The number of records stays the same, but the record type for BN_EMPLID_WRK, BN_EMPLID1_WRK and BN_JOB_WRK will be "Table".

2) Since no temporary records are created, there is nothing to assign in Program Properties -> Temp Tables. That step does not apply.

3) There is no need to use the %Table meta-SQL when referring to the records.

Everything else remains exactly the same. We also need to understand how differently the program behaves without parallel processing.

In this case, when we run the program, the system stores all 100,000 rows in the single table BN_EMPLID_WRK during the execution of Step 8 explained earlier, rather than in the various temp table instances BN_EMPLID_WRK1, BN_EMPLID_WRK2, etc. As execution goes on, all 100,000 rows are fetched from that one table and processed. With parallel processing, a chunk of 10,000 rows is fetched from each temp table instance and all the chunks are processed simultaneously.
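The contrast between the two runs can be mimicked in Python with a thread pool: one call handles all rows at once, while the other hands one chunk to each of 10 workers. This is an analogy only. `process_chunk` is a hypothetical stand-in for the real per-row work, and threads here merely play the role of the temp table instances in the description above.

```python
from concurrent.futures import ThreadPoolExecutor


def process_chunk(emplids: list[int]) -> int:
    """Stand-in for the real work: report how many rows were handled."""
    return len(emplids)


def run_serial(emplids: list[int]) -> int:
    """Non-parallel case: all rows go through one table in one pass."""
    return process_chunk(emplids)


def run_parallel(emplids: list[int], instance_count: int) -> int:
    """Parallel case: one chunk per instance, all chunks handled concurrently."""
    size = max(1, -(-len(emplids) // instance_count))  # ceiling division
    chunks = [emplids[i:i + size] for i in range(0, len(emplids), size)]
    with ThreadPoolExecutor(max_workers=instance_count) as pool:
        return sum(pool.map(process_chunk, chunks))


if __name__ == "__main__":
    employees = list(range(100_000))
    print("serial rows processed:  ", run_serial(employees))
    print("parallel rows processed:", run_parallel(employees, 10))
```

Both runs handle the same 100,000 rows; the difference lies only in how the work is laid out.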

Hence, if the non-parallel run takes 20 minutes, the parallel run would ideally take about 20/10 = 2 minutes; allowing for overhead, expect roughly 2 to 5 minutes.
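The estimate can be written out as a small calculation. It is a back-of-the-envelope sketch, not a measurement. The function names and the `overhead_minutes` parameter are assumptions introduced here to show how an ideal 2 minutes can stretch toward 5.

```python
def ideal_parallel_minutes(serial_minutes: float, instance_count: int) -> float:
    """Best case: the work divides perfectly across the instances."""
    return serial_minutes / instance_count


def estimated_parallel_minutes(
    serial_minutes: float, instance_count: int, overhead_minutes: float = 0.0
) -> float:
    """Ideal time plus a fixed, assumed overhead that does not shrink with more instances."""
    return ideal_parallel_minutes(serial_minutes, instance_count) + overhead_minutes


if __name__ == "__main__":
    print(ideal_parallel_minutes(20, 10))           # ideal case
    print(estimated_parallel_minutes(20, 10, 3.0))  # with 3 minutes of assumed overhead
```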

How is the data logically divided here?
